Statistical modeling applies statistical methods to real-world data to give empirical content to relationships. It aims to quantify phenomena, develop models, and test hypotheses, making it a crucial field for economic research, policy analysis, and decision-making. The aim of statistical modeling is to study the (unknown) mechanism that generates the data, i.e. the Data Generating Process (DGP). A statistical model is a function that approximates the DGP.

The matrix of data

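Let’s consider $n$ realizations defining a sample, indexed by $i = 1, \dots, n$. Suppose we have $p$ dependent variables and $k$ explanatory variables (also known as predictors). The $n \times k$ data matrix of the exogenous variables (regressors), $\mathbf{X}$, is defined as in Equation 13.1, while the $n \times p$ matrix composed of the endogenous (dependent) variables reads
$$
\mathbf{Y} = \begin{pmatrix} y_{1,1} & \dots & y_{1,p} \\ \vdots & \ddots & \vdots \\ y_{n,1} & \dots & y_{n,p} \end{pmatrix}.
$$
Hence, the complete $n \times (p + k)$ matrix of data reads
$$
\mathbf{W} = \begin{pmatrix} \mathbf{Y} & \mathbf{X} \end{pmatrix}. \tag{14.1}
$$
In general, when $p = 1$ the model has only one equation to satisfy for $i = 1, \dots, n$, for example
$$
y_i = b_1 x_{i,1} + \dots + b_k x_{i,k} + u_i.
$$
Otherwise, when $p > 1$ there is more than one dependent variable and the model is composed of $p$ equations for $j = 1, \dots, p$, i.e. the same linear model with $p$ equations reads:
$$
y_{i,j} = b_{1,j} x_{i,1} + \dots + b_{k,j} x_{i,k} + u_{i,j}. \tag{14.2}
$$
Thus the $n \times p$ matrix of the residual components reads
$$
\mathbf{U} = \begin{pmatrix} u_{1,1} & \dots & u_{1,p} \\ \vdots & \ddots & \vdots \\ u_{n,1} & \dots & u_{n,p} \end{pmatrix}.
$$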
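As a rough illustration of how the data matrices fit together, the following sketch builds the regressor matrix, the matrix of dependent variables, and the complete matrix of data with NumPy. The names `n`, `p`, `k`, `B`, and the simulated values are assumptions made for the example and are not taken from the text.

```python
import numpy as np

rng = np.random.default_rng(0)
n, p, k = 6, 2, 3  # hypothetical: observations, dependent variables, regressors

X = rng.normal(size=(n, k))              # matrix of the regressors (n x k)
B = rng.normal(size=(k, p))              # hypothetical coefficients, one column per equation
U = rng.normal(scale=0.1, size=(n, p))   # matrix of the residual components (n x p)
Y = X @ B + U                            # p linear equations, one per column of Y
W = np.hstack([Y, X])                    # complete matrix of data (n x (p + k))

print(W.shape)  # (6, 5)
```

Each column of `Y` corresponds to one equation of the model, so setting `p = 1` gives back the single-equation case.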

Joint, conditional and marginals

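Let’s consider the bi-dimensional random vector $(Y, X)$ in Equation 14.1 and let’s write the joint distribution of $Y$ and $X$, i.e.
$$
F_{Y,X}(y, x) = \mathbb{P}(Y \le y, X \le x). \tag{14.4}
$$
In the continuous case, there exists a joint density $f_{Y,X}$ such that:
$$
F_{Y,X}(y, x) = \int_{-\infty}^{y} \int_{-\infty}^{x} f_{Y,X}(s, t) \, dt \, ds.
$$
Moreover, from the joint distribution (Equation 14.4) it is possible to recover the marginal distributions, i.e.
$$
f_Y(y) = \int_{-\infty}^{\infty} f_{Y,X}(y, x) \, dx, \qquad f_X(x) = \int_{-\infty}^{\infty} f_{Y,X}(y, x) \, dy. \tag{14.6}
$$

Then, given the marginals (Equation 14.6), it is possible to compute the unconditional moments, for example

- First moment: $\mathbb{E}[Y] = \int_{-\infty}^{\infty} y \, f_Y(y) \, dy$.

- Second moment: $\mathbb{E}[Y^2] = \int_{-\infty}^{\infty} y^2 f_Y(y) \, dy$.

Applying the Bayes theorem (Theorem 6.2), from the joint distribution (Equation 14.4) it is possible to recover the conditional distribution, i.e.
$$
f_{Y \mid X}(y \mid x) = \frac{f_{Y,X}(y, x)}{f_X(x)}, \qquad f_X(x) > 0.
$$

Given the conditional distribution, the conditional moments read
$$
\mathbb{E}[Y \mid X = x] = \int_{-\infty}^{\infty} y \, f_{Y \mid X}(y \mid x) \, dy, \qquad \mathbb{V}[Y \mid X = x] = \int_{-\infty}^{\infty} \left(y - \mathbb{E}[Y \mid X = x]\right)^2 f_{Y \mid X}(y \mid x) \, dy.
$$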
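The chain from joint to marginal to conditional can be checked numerically. The sketch below uses a discrete analogue of the continuous case, so sums replace integrals. The joint probability table and the support points are hypothetical values chosen only for illustration.

```python
import numpy as np

# Hypothetical joint pmf: rows index the values of Y, columns the values of X.
y_vals = np.array([0.0, 1.0, 2.0])
x_vals = np.array([0.0, 1.0])
joint = np.array([[0.20, 0.10],
                  [0.20, 0.10],
                  [0.10, 0.30]])

# Marginals: sum the joint over the other variable.
marg_y = joint.sum(axis=1)
marg_x = joint.sum(axis=0)

# Unconditional first and second moments of Y.
mean_y = np.sum(y_vals * marg_y)
second_moment_y = np.sum(y_vals**2 * marg_y)

# Conditional pmf of Y given X = x_j (column j), i.e. joint / marginal of X.
cond_y_given_x = joint / marg_x

# Conditional mean of Y for each value of X.
cond_mean = y_vals @ cond_y_given_x
```

Averaging `cond_mean` with the weights in `marg_x` gives back `mean_y`, which is the tower property in this discrete setting.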

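Example 14.1 Let’s consider a multivariate Gaussian setup, i.e.
$$
\begin{pmatrix} \mathbf{Y} \\ \mathbf{X} \end{pmatrix} \sim \mathcal{N}\left( \begin{pmatrix} \boldsymbol{\mu}_Y \\ \boldsymbol{\mu}_X \end{pmatrix}, \begin{pmatrix} \boldsymbol{\Sigma}_{YY} & \boldsymbol{\Sigma}_{YX} \\ \boldsymbol{\Sigma}_{XY} & \boldsymbol{\Sigma}_{XX} \end{pmatrix} \right).
$$
If $(\mathbf{Y}, \mathbf{X})$ are jointly normal, then the marginals are multivariate normal, i.e.
$$
\mathbf{Y} \sim \mathcal{N}(\boldsymbol{\mu}_Y, \boldsymbol{\Sigma}_{YY}), \qquad \mathbf{X} \sim \mathcal{N}(\boldsymbol{\mu}_X, \boldsymbol{\Sigma}_{XX}),
$$
and so are the conditional distributions, i.e.
$$
\mathbf{Y} \mid \mathbf{X} = \mathbf{x} \sim \mathcal{N}(\boldsymbol{\mu}_{Y \mid X}, \boldsymbol{\Sigma}_{Y \mid X}).
$$
In this setup the conditional expectation of $\mathbf{Y}$ given $\mathbf{X} = \mathbf{x}$ reads
$$
\boldsymbol{\mu}_{Y \mid X} = \boldsymbol{\mu}_Y + \boldsymbol{\Sigma}_{YX} \boldsymbol{\Sigma}_{XX}^{-1} (\mathbf{x} - \boldsymbol{\mu}_X),
$$
and the conditional variance reads
$$
\boldsymbol{\Sigma}_{Y \mid X} = \boldsymbol{\Sigma}_{YY} - \boldsymbol{\Sigma}_{YX} \boldsymbol{\Sigma}_{XX}^{-1} \boldsymbol{\Sigma}_{XY}.
$$
In this setup the parameters are:

- Joint distribution, $\theta = (\boldsymbol{\mu}_Y, \boldsymbol{\mu}_X, \boldsymbol{\Sigma}_{YY}, \boldsymbol{\Sigma}_{YX}, \boldsymbol{\Sigma}_{XX})$.

- Conditional distribution, $\lambda_1 = (\boldsymbol{\mu}_Y - \boldsymbol{\Sigma}_{YX} \boldsymbol{\Sigma}_{XX}^{-1} \boldsymbol{\mu}_X, \; \boldsymbol{\Sigma}_{YX} \boldsymbol{\Sigma}_{XX}^{-1}, \; \boldsymbol{\Sigma}_{Y \mid X})$.

- Marginal distribution, $\lambda_2 = (\boldsymbol{\mu}_X, \boldsymbol{\Sigma}_{XX})$.

Note that $\lambda = (\lambda_1, \lambda_2)$ is a function of $\theta$, i.e. $\lambda = g(\theta)$. In the Gaussian case it is possible to prove that $\lambda_1$ and $\lambda_2$ are free to vary. Hence, imposing restrictions on $\lambda_1$ does not impose restrictions on $\lambda_2$. In general, if the parameters of interest $\psi$ are a function of the parameters of the conditional distribution only, $\psi = h(\lambda_1)$, and $\lambda_1$ and $\lambda_2$ are free to vary, then inference can be carried out without loss of information by considering only the conditional model. In this case we say that $\mathbf{X}$ is weakly exogenous for $\psi$.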
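For the Gaussian example, the conditional mean and variance follow from the standard partitioned-normal formulas. The sketch below evaluates them with NumPy. The function name `gaussian_conditional`, its argument names, and the numerical values are assumptions for illustration only.

```python
import numpy as np

def gaussian_conditional(mu_y, mu_x, s_yy, s_yx, s_xx, x):
    """Mean and covariance of Y | X = x when (Y, X) is jointly normal."""
    # beta = S_yx S_xx^{-1}, obtained with a linear solve (S_xx is symmetric).
    beta = np.linalg.solve(s_xx, s_yx.T).T
    cond_mean = mu_y + beta @ (x - mu_x)
    cond_cov = s_yy - beta @ s_yx.T
    return cond_mean, cond_cov

# Hypothetical bivariate example (one dependent variable, one regressor).
mu_y = np.array([1.0])
mu_x = np.array([2.0])
s_yy = np.array([[4.0]])
s_yx = np.array([[1.5]])
s_xx = np.array([[3.0]])

cond_mean, cond_cov = gaussian_conditional(mu_y, mu_x, s_yy, s_yx, s_xx, x=np.array([3.0]))
```

In this example the slope is `1.5 / 3.0 = 0.5`, so the conditional mean at `x = 3` is `1 + 0.5 * (3 - 2) = 1.5` and the conditional variance is `4 - 0.5 * 1.5 = 3.25`. The conditional variance does not depend on the value of `x`, a feature specific to the Gaussian case.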

Conditional expectation model

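Let’s consider a very general conditional expectation model with conditional mean $\mathbb{E}[\mathbf{Y} \mid \mathbf{X}]$, of which the linear models are a special case. In matrix notation it can be written as:
$$
\mathbf{Y} = \mathbb{E}[\mathbf{Y} \mid \mathbf{X}] + \mathbf{U}, \tag{14.8}
$$
where the conditional expectation errors are defined as:
$$
\mathbf{U} = \mathbf{Y} - \mathbb{E}[\mathbf{Y} \mid \mathbf{X}]. \tag{14.9}
$$

Proposition 14.1 In a conditional expectation model as in Equation 14.8, the residuals $\mathbf{U}$, defined as in Equation 14.9, have unconditional expectation and covariance with the regressors equal to zero, i.e.
$$
\mathbb{E}[\mathbf{U}] = \mathbf{0}, \qquad \operatorname{Cov}(\mathbf{X}, \mathbf{U}) = \mathbb{E}[\mathbf{X}^\top \mathbf{U}] = \mathbf{0}.
$$
Moreover, the conditional expectation error is orthogonal to any transformation $g(\cdot)$ of the conditioning variables, i.e.
$$
\mathbb{E}[g(\mathbf{X})^\top \mathbf{U}] = \mathbf{0}. \tag{14.10}
$$

Proof. Let’s start with the unconditional expectation of the residuals defined in Equation 14.8. By the tower property of conditional expectation,
$$
\mathbb{E}[\mathbf{U}] = \mathbb{E}\left[\mathbb{E}[\mathbf{U} \mid \mathbf{X}]\right] = \mathbb{E}\left[\mathbb{E}[\mathbf{Y} \mid \mathbf{X}] - \mathbb{E}[\mathbf{Y} \mid \mathbf{X}]\right] = \mathbf{0}.
$$
Then, let’s compute the expected value of the product between the residuals and the regressors, i.e. $\mathbb{E}[\mathbf{X}^\top \mathbf{U}]$. For simplicity let’s assume that $\mathbf{X}$ can take only values in a finite set $\{\mathbf{x}_1, \dots, \mathbf{x}_m\}$. Applying the tower property of conditional expectation one obtains:
$$
\mathbb{E}[\mathbf{X}^\top \mathbf{U}] = \sum_{j=1}^{m} \mathbf{x}_j^\top \, \mathbb{E}[\mathbf{U} \mid \mathbf{X} = \mathbf{x}_j] \, \mathbb{P}(\mathbf{X} = \mathbf{x}_j).
$$
Then, let’s substitute $\mathbf{U} = \mathbf{Y} - \mathbb{E}[\mathbf{Y} \mid \mathbf{X}]$ from Equation 14.8 and note that, with $\mathbf{X} = \mathbf{x}_j$ fixed, $\mathbb{E}[\mathbf{U} \mid \mathbf{X} = \mathbf{x}_j] = \mathbb{E}[\mathbf{Y} \mid \mathbf{X} = \mathbf{x}_j] - \mathbb{E}[\mathbf{Y} \mid \mathbf{X} = \mathbf{x}_j] = \mathbf{0}$, i.e.
$$
\mathbb{E}[\mathbf{X}^\top \mathbf{U}] = \mathbf{0}.
$$
Since $\mathbb{E}[\mathbf{U}] = \mathbf{0}$, this cross moment coincides with the covariance. For a general transformation of the regressors as in Equation 14.10, the covariance is computed in the same way:
$$
\mathbb{E}[g(\mathbf{X})^\top \mathbf{U}] = \mathbb{E}\left[g(\mathbf{X})^\top \, \mathbb{E}[\mathbf{U} \mid \mathbf{X}]\right] = \mathbf{0}.
$$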
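The proposition can be checked by simulation. The following sketch draws data from a hypothetical nonlinear conditional mean with an error whose variance depends on the regressor, then verifies that the error has sample mean and sample covariances with several transformations of the regressor close to zero. The conditional mean `sin(x) + x**2`, the error scale, and the helper `sample_cov` are assumptions chosen for illustration.

```python
import numpy as np

rng = np.random.default_rng(42)
n = 200_000

x = rng.uniform(-2.0, 2.0, size=n)
cond_mean = np.sin(x) + x**2                   # hypothetical E[Y | X]
u = (0.5 + np.abs(x)) * rng.normal(size=n)     # E[u | X] = 0, variance depends on X
y = cond_mean + u

def sample_cov(a, b):
    """Sample covariance between two one-dimensional arrays."""
    return np.mean((a - a.mean()) * (b - b.mean()))

checks = {
    "mean of u": u.mean(),
    "cov(x, u)": sample_cov(x, u),
    "cov(x^2, u)": sample_cov(x**2, u),
    "cov(cos(x), u)": sample_cov(np.cos(x), u),
}

# Orthogonality is weaker than independence: u^2 is still related to x^2.
cov_squares = sample_cov(x**2, u**2)
```

All entries of `checks` should be close to zero up to sampling noise, while `cov_squares` is clearly positive because the error variance grows with the magnitude of the regressor.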

Uniequational linear models

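Let’s consider a uni-equational linear model, i.e. the model with $p = 1$ in Equation 14.2, expressed in compact matrix notation as:
$$
\mathbf{y} = \mathbf{X} \mathbf{b} + \mathbf{u},
$$
where $\mathbf{b}$ and $\mathbf{u}$ represent the true parameters and residuals in the population. Let’s consider a sample of $n$ observations extracted from a population. Then the matrix of the regressors $\mathbf{X}$ reads as in Equation 13.1, while the vectors of the dependent variable and of the residuals read
$$
\mathbf{y} = \begin{pmatrix} y_1 \\ \vdots \\ y_n \end{pmatrix}, \qquad \mathbf{u} = \begin{pmatrix} u_1 \\ \vdots \\ u_n \end{pmatrix}.
$$
Hence, the matrix of data is composed of:
$$
\mathbf{W} = \begin{pmatrix} \mathbf{y} & \mathbf{X} \end{pmatrix}.
$$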

Estimators of b

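In general, the true population parameter $\mathbf{b}$ is unknown and lives in the parameter space, i.e. $\mathbf{b} \in \Theta_{\mathbf{b}} \subseteq \mathbb{R}^k$. In the following section we will denote by $\hat{\mathbf{b}}(\cdot)$ the estimator function, while $\hat{\mathbf{b}}$ will denote one of its possible estimates.

Depending on the specification of the model, the function $\hat{\mathbf{b}}(\cdot)$ takes as input the matrix of data and returns as output a vector of estimates, i.e.
$$
\hat{\mathbf{b}} = \hat{\mathbf{b}}(\mathbf{W}) = \hat{\mathbf{b}}(\mathbf{y}, \mathbf{X}).
$$
In this context, the fitted values can be seen as a function of the estimate $\hat{\mathbf{b}}$, i.e.
$$
\hat{\mathbf{y}}(\hat{\mathbf{b}}) = \mathbf{X} \hat{\mathbf{b}}.
$$
Consequently, the fitted residuals $\hat{\mathbf{u}}$, which measure the discrepancies between the observed and the fitted values, are also a function of $\hat{\mathbf{b}}$, i.e.
$$
\hat{\mathbf{u}}(\hat{\mathbf{b}}) = \mathbf{y} - \hat{\mathbf{y}}(\hat{\mathbf{b}}) = \mathbf{y} - \mathbf{X} \hat{\mathbf{b}}.
$$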
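To make the mapping from data to estimates, fitted values, and fitted residuals concrete, here is a minimal sketch that uses least squares as one possible choice of estimator function. The function names `estimate`, `fitted_values`, and `fitted_residuals`, and the simulated data, are assumptions for illustration.

```python
import numpy as np

def estimate(y, X):
    """One possible estimator function: least squares via numpy.linalg.lstsq."""
    b_hat, *_ = np.linalg.lstsq(X, y, rcond=None)
    return b_hat

def fitted_values(X, b_hat):
    """Fitted values as a function of the estimate."""
    return X @ b_hat

def fitted_residuals(y, X, b_hat):
    """Discrepancies between observed and fitted values."""
    return y - fitted_values(X, b_hat)

# Hypothetical sample: intercept plus two regressors.
rng = np.random.default_rng(1)
n = 500
X = np.column_stack([np.ones(n), rng.normal(size=(n, 2))])
b_true = np.array([1.0, 2.0, -0.5])
y = X @ b_true + rng.normal(scale=0.1, size=n)

b_hat = estimate(y, X)
y_hat = fitted_values(X, b_hat)
u_hat = fitted_residuals(y, X, b_hat)
```

With this estimator the fitted residuals are orthogonal to the columns of `X` by construction, so `X.T @ u_hat` is numerically zero.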

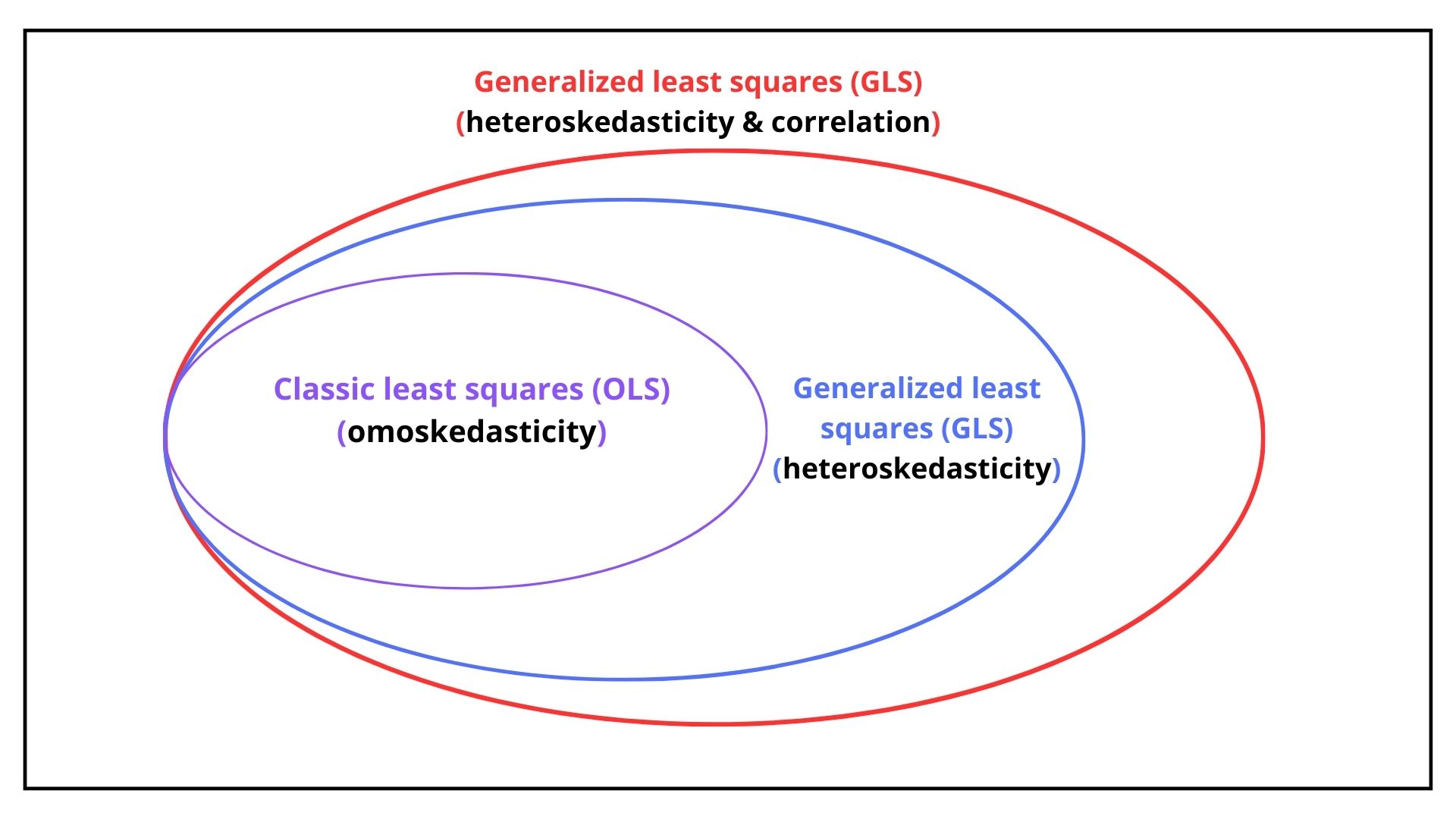

As we will see in Chapter 15, the assumptions on the variance of the residuals determine the optimal estimator of $\mathbf{b}$. In general, the residuals could be

1. homoskedastic: the residuals are uncorrelated and their variance is the same for each observation.

2. heteroskedastic: the residuals are uncorrelated and their variance differs across observations.

3. autocorrelated: the residuals are correlated, and their variance may be either equal or different across observations.

As shown in Figure 14.1, depending on the assumption (1, 2 or 3), the optimal estimator of $\mathbf{b}$ is obtained with Ordinary Least Squares (OLS) in case 1, and with Generalized Least Squares (GLS) in cases 2 and 3.
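The difference between the two estimators can be sketched in a few lines. The code below uses the textbook closed forms, $(\mathbf{X}^\top \mathbf{X})^{-1} \mathbf{X}^\top \mathbf{y}$ for OLS and $(\mathbf{X}^\top \boldsymbol{\Sigma}^{-1} \mathbf{X})^{-1} \mathbf{X}^\top \boldsymbol{\Sigma}^{-1} \mathbf{y}$ for GLS, and assumes the residual variance-covariance matrix is known, which is rarely true in practice. The function names, the heteroskedasticity pattern, and the simulated data are hypothetical.

```python
import numpy as np

def ols(y, X):
    """Ordinary Least Squares via the normal equations."""
    return np.linalg.solve(X.T @ X, X.T @ y)

def gls(y, X, sigma):
    """Generalized Least Squares with a known residual covariance matrix sigma."""
    sigma_inv_X = np.linalg.solve(sigma, X)   # Sigma^{-1} X
    sigma_inv_y = np.linalg.solve(sigma, y)   # Sigma^{-1} y
    return np.linalg.solve(X.T @ sigma_inv_X, X.T @ sigma_inv_y)

# Hypothetical heteroskedastic sample: the error standard deviation grows with x.
rng = np.random.default_rng(2)
n = 300
x = rng.uniform(1.0, 5.0, size=n)
X = np.column_stack([np.ones(n), x])
b_true = np.array([1.0, 2.0])
sd = 0.2 * x**2
sigma = np.diag(sd**2)
y = X @ b_true + sd * rng.normal(size=n)

b_ols = ols(y, X)
b_gls = gls(y, X, sigma)

# With uncorrelated residuals, GLS is OLS on observations rescaled by 1 / sd.
b_weighted = ols(y / sd, X / sd[:, None])
```

When `sigma` is proportional to the identity matrix (case 1), `gls` returns the same estimate as `ols`. The normal equations are used here for readability. A QR or `lstsq` based solve is usually preferable numerically.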

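For example, if the residuals are correlated, then their conditional variance-covariance matrix reads
$$
\mathbb{V}[\mathbf{u} \mid \mathbf{X}] = \boldsymbol{\Sigma},
$$
or more explicitly,
$$
\boldsymbol{\Sigma} = \begin{pmatrix} \sigma_1^2 & \sigma_{1,2} & \dots & \sigma_{1,n} \\ \sigma_{2,1} & \sigma_2^2 & \dots & \sigma_{2,n} \\ \vdots & \vdots & \ddots & \vdots \\ \sigma_{n,1} & \sigma_{n,2} & \dots & \sigma_n^2 \end{pmatrix}.
$$

Since the matrix $\boldsymbol{\Sigma}$ is symmetric, the number of distinct elements above (or below) the diagonal reads
$$
\frac{n(n-1)}{2}.
$$
Hence, given that the number of elements of $\boldsymbol{\Sigma}$ is $n^2$, the number of unique values (free elements) is given by the $n$ variances plus the $n(n-1)/2$ covariances, i.e.
$$
n + \frac{n(n-1)}{2} = \frac{n(n+1)}{2}.
$$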
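The counting argument is easy to verify on a small example. The sketch below builds an arbitrary symmetric matrix and counts its diagonal and upper-triangular entries with NumPy. The helper names and the size `n = 4` are assumptions for illustration.

```python
import numpy as np

def n_covariances(n):
    """Number of distinct off-diagonal elements of a symmetric n x n matrix."""
    return n * (n - 1) // 2

def n_free_elements(n):
    """Variances plus distinct covariances."""
    return n + n_covariances(n)

n = 4
sigma = np.arange(n * n, dtype=float).reshape(n, n)
sigma = (sigma + sigma.T) / 2                     # make the matrix symmetric

upper = sigma[np.triu_indices(n)]                 # diagonal and above
strict_upper = sigma[np.triu_indices(n, k=1)]     # strictly above the diagonal

print(len(strict_upper), len(upper), sigma.size)  # 6 10 16
```

The count grows quadratically with the number of observations, which is why an unrestricted variance-covariance matrix of the residuals cannot be estimated from a single sample of the same size without further structure.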